Horizontal Scaling

Horizontal scaling is achieved by balancing the load across multiple instances of each MPC node in the Builder Vault TSM. This improves throughput and provides failover. We support three types of horizontal scaling of the Builder Vault TSM:

- Replicated TSM: This allows for the most efficient scaling, but it is visible from the outside (i.e., from the SDKs) that there are multiple TSMs. To scale up or down, you need to add or remove all of the node instances in a TSM instance at the same time.

- Replicated MPC Nodes with Direct Communication: If you have a TSM where the MPC nodes are configured to communicate with each other using direct TCP connections, you can enable horizontal scaling in the MPC node configuration. This allows you to run multiple instances of each node behind a load balancer. To the outside, this looks like a single TSM instance, but you can add and remove instances of each node, and you can do so individually for each node. This setup has some overhead, because the MPC node instances have to do internal routing.

- Replicated MPC Nodes with Message Broker: If your MPC nodes use a message broker, such as AMQP or Redis, to communicate with each other, you can also enable horizontal scaling. As in the case with direct communication, this allows you to add or remove individual MPC node instances independently behind a firewall.

The three options are explained in more detail below. Contact Blockdaemon for more information about the performance of these scaling methods.

Replicated TSM

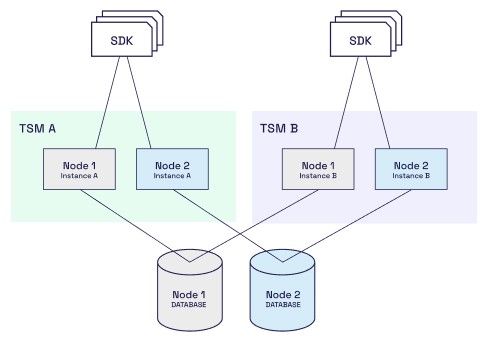

In this setup, you deploy several identical TSMs, each consisting of one set of MPC nodes. All instances of MPC Node 1 (one in each TSM) are configured to share the same database. Similarly, all instances of MPC Node 2 share the same database, and so forth. With this setup, an SDK can use any of the TSMs.

As an example, the figure above shows a setup with two TSMs (TSM A and TSM B), each consisting of two MPC nodes. A number of SDKs are configured such that some of them connect to TSM A while the other SDKs connect to TSM B.

This is a simple way of improving throughput and/or providing failover. The application can spin up new TSMs if needed, and the SDKs can be dynamically configured to use a random TSM, the TSM with the most free resources, and so on.

In this setup, however, it is up to your application to figure out which TSM a given SDK should use. To increase performance, you have to add a complete TSM to the setup, i.e., you cannot just add one MPC node at a time. This may be difficult to coordinate if the TSM nodes are controlled by different organizations.
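The selection logic that your application has to provide can be sketched as follows. This is only an illustration of the two strategies mentioned above (random choice and most free resources). The `TsmEndpoint` type, the example URLs, and the `active_sessions` load metric are hypothetical and are not part of the Builder Vault SDK. The sketch assumes that your application tracks health and load itself.

```python
import random
from dataclasses import dataclass


@dataclass
class TsmEndpoint:
    """One complete TSM replica (hypothetical type, not an SDK class)."""
    name: str
    node_urls: list[str]      # one URL per MPC node of this TSM
    active_sessions: int = 0  # load metric tracked by the application (assumption)
    healthy: bool = True


def healthy_tsms(tsms: list[TsmEndpoint]) -> list[TsmEndpoint]:
    """Return the TSMs that are currently usable, or fail if there are none."""
    candidates = [t for t in tsms if t.healthy]
    if not candidates:
        raise RuntimeError("no healthy TSM available")
    return candidates


def pick_random(tsms: list[TsmEndpoint], rng: random.Random) -> TsmEndpoint:
    """Pick any healthy TSM uniformly at random."""
    return rng.choice(healthy_tsms(tsms))


def pick_least_loaded(tsms: list[TsmEndpoint]) -> TsmEndpoint:
    """Pick the healthy TSM with the fewest active sessions."""
    return min(healthy_tsms(tsms), key=lambda t: t.active_sessions)


tsms = [
    TsmEndpoint("TSM A", ["https://a-node1.example.com", "https://a-node2.example.com"], active_sessions=7),
    TsmEndpoint("TSM B", ["https://b-node1.example.com", "https://b-node2.example.com"], active_sessions=3),
]

chosen = pick_least_loaded(tsms)
print(chosen.name)  # TSM B

tsms[1].healthy = False  # simulate TSM B becoming unavailable
fallback = pick_least_loaded(tsms)
print(fallback.name)  # TSM A
```

Note that the sketch always hands out the node URLs of one TSM together, so an SDK connects to the MPC nodes of a single TSM rather than mixing nodes from different TSMs.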

Replicated MPC Nodes with Direct Communication

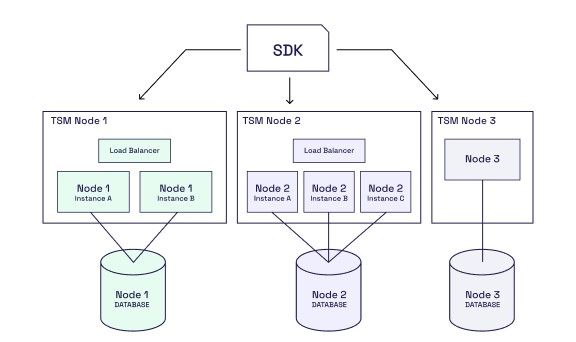

Alternatively, you can run multiple instances of a single MPC node behind a standard (round robin) load balancer such as HAProxy. Again, all the instances of a given MPC node share the same database. In this setup, all the instances of a given MPC node must be configured to route sessions to each other (see configuration example below).

The benefit of this configuration is that from the outside, i.e., seen from the SDK, there is only a single TSM. Also, the replication can be scaled up/down at each MPC node independently of how the other MPC nodes are scaled.

The figure above shows a setup with a TSM consisting of three MPC nodes. Node 1 is configured with two node instances, and Node 2 is configured with three node instances. Node 3 runs in the standard configuration, with a single node instance.
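To make the idea of internal routing more concrete, here is a small simulation of Node 1 with two instances behind a round-robin load balancer. It is a conceptual sketch only and does not describe the actual implementation. It assumes that the instance that first sees a session becomes its owner, and that ownership is recorded in a routing table in the shared database (modeled here as a plain dictionary). All class and instance names are hypothetical.

```python
import itertools


class NodeInstance:
    """One instance of an MPC node; all instances of a node share `routing_table`."""

    def __init__(self, name: str, routing_table: dict[str, str]):
        self.name = name
        self.routing_table = routing_table  # stands in for the shared database
        self.peers: dict[str, "NodeInstance"] = {}
        self.handled: list[tuple[str, str]] = []  # (session_id, message) pairs

    def receive(self, session_id: str, message: str) -> str:
        """Handle the message locally, or forward it to the session's owner."""
        # Assumption: the first instance to see a session registers as its owner.
        owner = self.routing_table.setdefault(session_id, self.name)
        if owner == self.name:
            self.handled.append((session_id, message))
            return self.name
        return self.peers[owner].receive(session_id, message)


def make_node(instance_names: list[str]) -> list[NodeInstance]:
    """Create the instances of one MPC node and let them route to each other."""
    table: dict[str, str] = {}
    instances = [NodeInstance(n, table) for n in instance_names]
    for inst in instances:
        inst.peers = {p.name: p for p in instances if p is not inst}
    return instances


# Node 1 with two instances behind a round-robin load balancer.
node1 = make_node(["node1-a", "node1-b"])
balancer = itertools.cycle(node1)

# Three messages of the same session arrive at alternating instances,
# but all of them are handled by the instance that first saw the session.
owners = [next(balancer).receive("session-42", f"round {i}") for i in range(3)]
print(owners)  # ['node1-a', 'node1-a', 'node1-a']
```

The forwarding hop in the second message is the kind of overhead that internal routing adds compared to a setup without a load balancer.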

In the previous section, the MPC node instances used the standard configuration, except that all instances of each node connected to the same database. However, if your MPC nodes are configured to communicate using direct connections, you need to include the following additional configuration to enable horizontal scaling with independent MPC node replication:

```toml
# This setting enables multiple instances of the same player to be placed behind a load balancer. Each instance will
# either handle sessions itself or route the traffic to other instances.
[MultiInstance]

# IP address where this instance can be reached from the other instances. If not specified, an auto-detected address is
# used, and this might not be the address you want if there are multiple IP addresses associated with the system.
Address = ""

# SDK port announced to the other nodes. If not specified, it defaults to the SDK port from the [SDKServer] section.
SDKPort = 0

# MPC port announced to the other nodes. If not specified, it defaults to the MPC port from the [MPCTCPServer] section.
MPCPort = 0

# How often the cleanup job that purges old routing entries from the database should run.
CleanupInterval = "5m"

# At every CleanupInterval, the cleanup job runs with this probability. 0 means never and 100 means always.
CleanupProbability = 25
```
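The interplay between `CleanupInterval` and `CleanupProbability` can be estimated with a little arithmetic. The sketch below assumes that each instance makes an independent random draw once per interval; that independence is an assumption for illustration, not documented behavior. The function names are hypothetical.

```python
def expected_minutes_between_cleanups(interval_minutes: float, probability_percent: float) -> float:
    """Mean time between cleanup runs on a single instance."""
    if probability_percent <= 0:
        return float("inf")  # 0 means the job never runs
    # The number of intervals until the first run is geometric with mean 1/p.
    return interval_minutes / (probability_percent / 100)


def chance_any_instance_cleans(probability_percent: float, instances: int) -> float:
    """Probability that at least one of `instances` runs the job in a given interval."""
    p = probability_percent / 100
    return 1 - (1 - p) ** instances


# Values from the sample configuration: a 5 minute interval and 25 percent probability.
print(expected_minutes_between_cleanups(5, 25))      # 20.0
print(round(chance_any_instance_cleans(25, 3), 3))   # 0.578
```

Under these assumptions, a single instance cleans up about every 20 minutes on average, and with three instances of a node there is a roughly 58 percent chance that at least one of them purges old routing entries in any given 5 minute interval.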

Replicated MPC Nodes with Message Broker

If your Builder Vault MPC nodes are configured to communicate using a message broker, you can also scale up each MPC node individually. Doing so gives you the same benefits as with direct communication. Unlike with direct node-to-node communication, the MPC nodes need no additional configuration beyond what the message broker setup normally requires.
